Grants AI MVP

An Academia case study

Grants AI emerged from an internal AI hackathon.

The concept was greenlit for initial development with a focus on supporting our most engaged and high-value user segment: Academics.

How Academics apply for grants today

Academics are often responsible for funding all or some portion of their research. Writing a single proposal and compiling the details it needs can take 100+ hours. Multiply that by 6*, a lower-end estimate of how many grants a researcher might need to submit within a year.

*Based on a 2023 GrantStation survey in which 64% of respondents applied to 6 or more grants in the previous year
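As a back-of-the-envelope check, the two figures above can be multiplied out. This is only a sketch: both inputs are the lower bounds quoted in the text, not measured averages, and the names are illustrative.

```python
# Lower-bound figures taken from the text above; names are illustrative.
HOURS_PER_PROPOSAL = 100  # "100+ hours" per proposal, so this is a floor
PROPOSALS_PER_YEAR = 6    # lower-end estimate of yearly submissions


def yearly_proposal_hours(hours_per_proposal: int, proposals_per_year: int) -> int:
    """Return the minimum hours spent on proposals in a year."""
    return hours_per_proposal * proposals_per_year


total_hours = yearly_proposal_hours(HOURS_PER_PROPOSAL, PROPOSALS_PER_YEAR)
print(f"At least {total_hours} hours per year on proposal writing")
```

Under these assumptions, a researcher spends at least 600 hours a year on proposals.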

Finding relevant grants

Funding can come from grants offered by federal, nonprofit, or private funders. Finding these grants requires searching a number of individual databases and piecing together other resources like listservs, professional organizations, and word of mouth. In the words of one of our users, “I am always actively looking.”

Writing grant proposals

Applying for a funding opportunity requires a customized proposal that fits unique content and formatting requirements. For each submission, a researcher must source requirements, write the proposal, and supply supplementary information. The proposal writing process is broadly consistent, but requires detailed knowledge at each step.

How the Grants AI tool works

Our solution is built as two separate tools.

Opportunity Finder helps discover relevant funding opportunities.

Proposal Writer focuses on crafting a perfectly customized proposal.

The MVP Grants AI tool flow

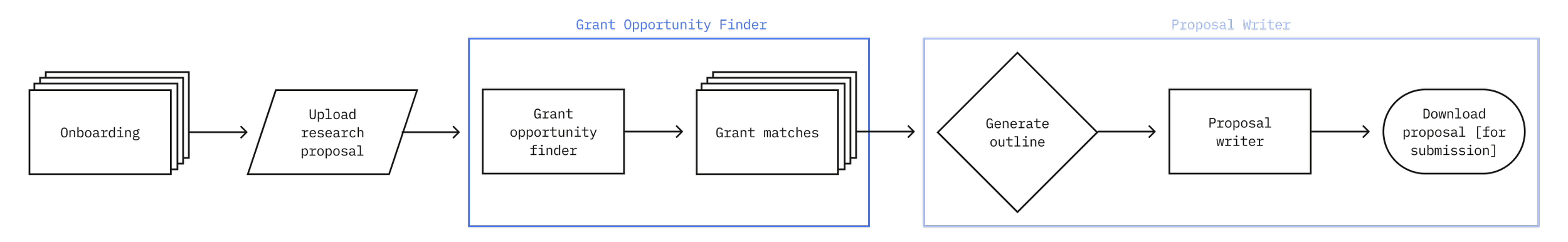

The product’s beta focused on creating an MVP end-to-end flow to test product-market fit.

AI is behind every stage of the tool. It powers the personalized search experience and streamlines the tedious aspects of the proposal writing process.

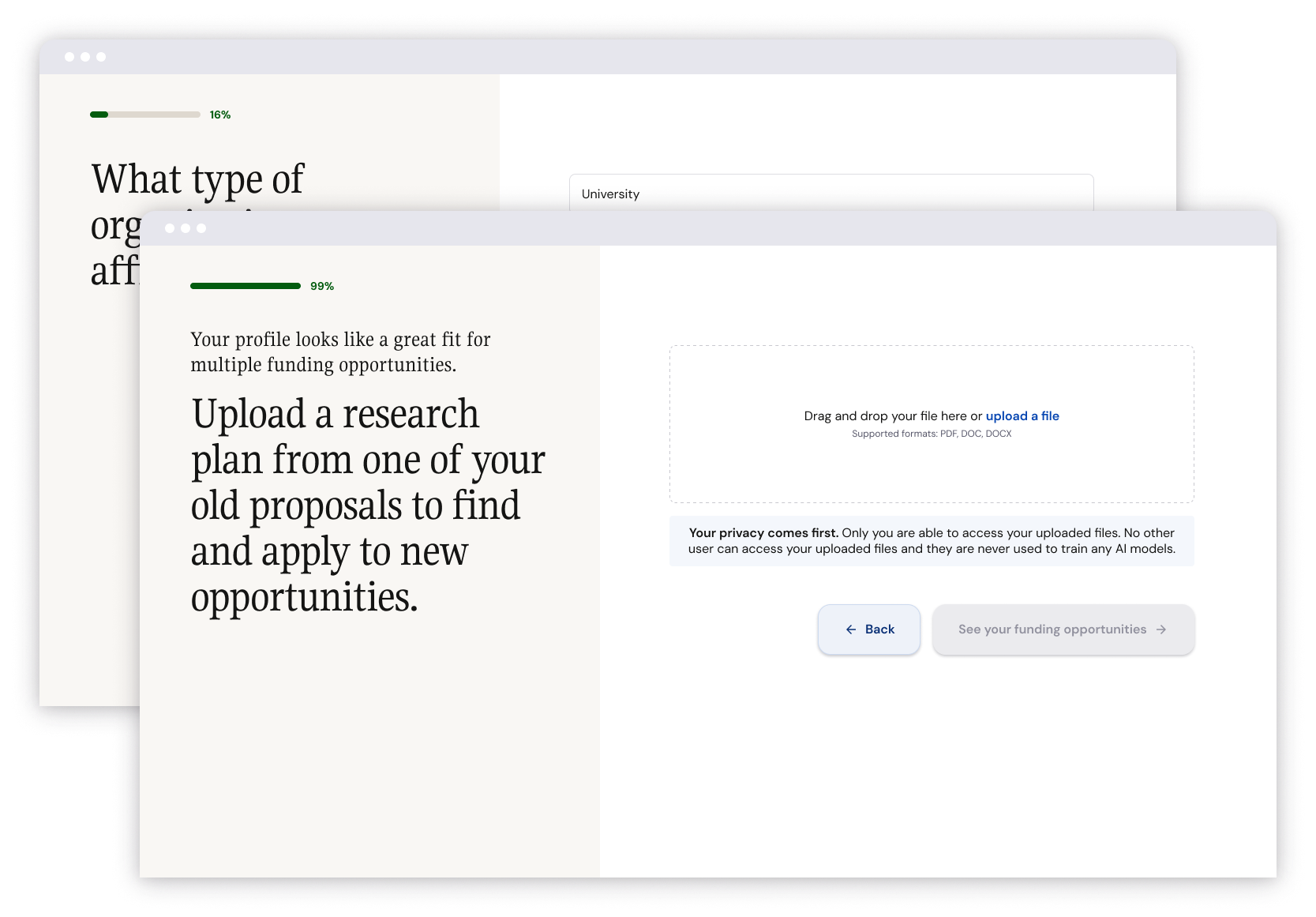

The experience starts with an onboarding question flow. Users are asked basic questions about their role, experience level, and research. Some of these questions are for our own edification, and some are used when we search for matches. Most importantly, the flow ends at an upload page where users provide a past research proposal. This proposal is the basis for the entire experience.

Onboarding did not exist in the original proof of concept. It was introduced so that our early beta users could get started with the tool on their own.

Onboarding question flow

Design updates for MVP

Proposal Writer

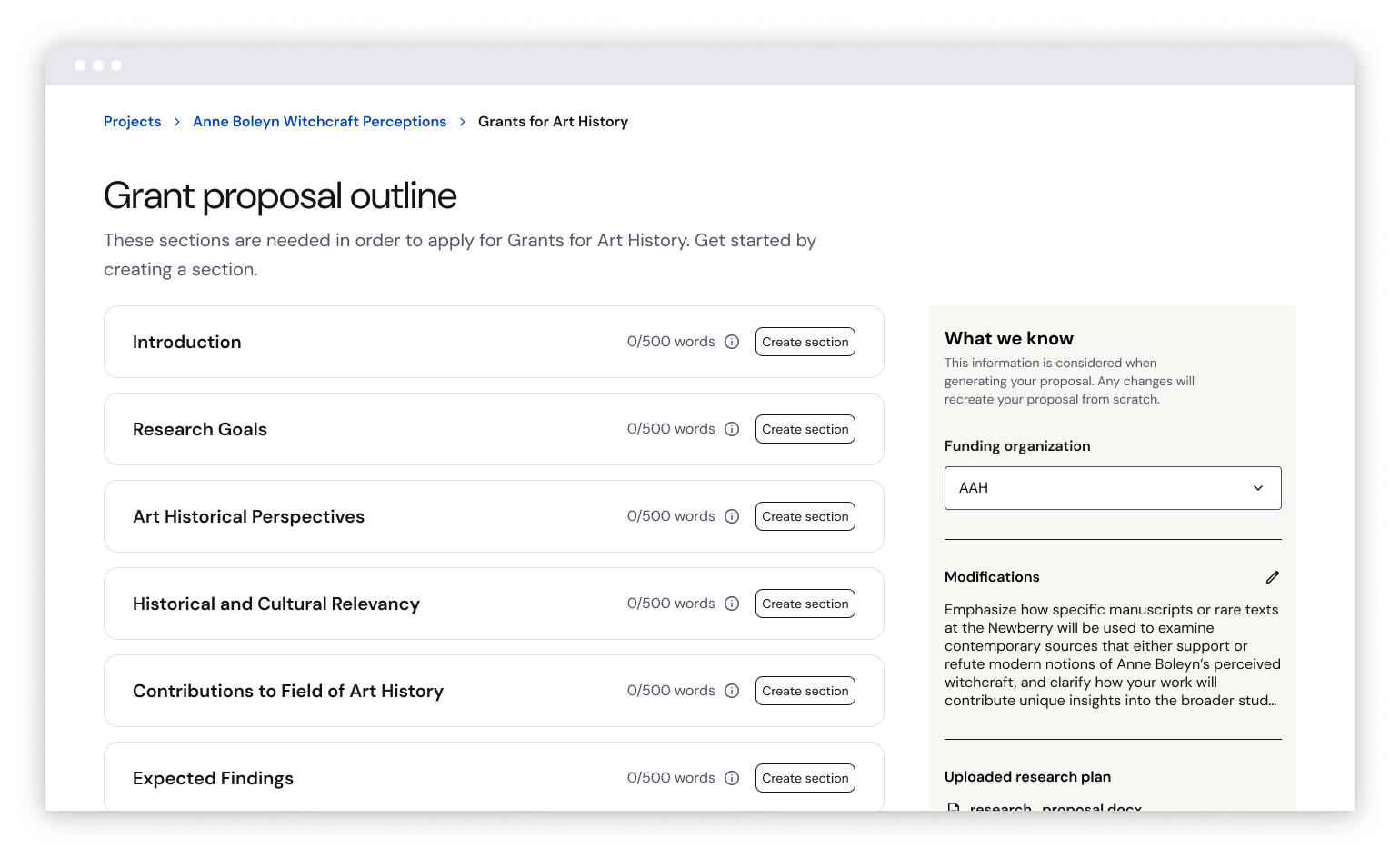

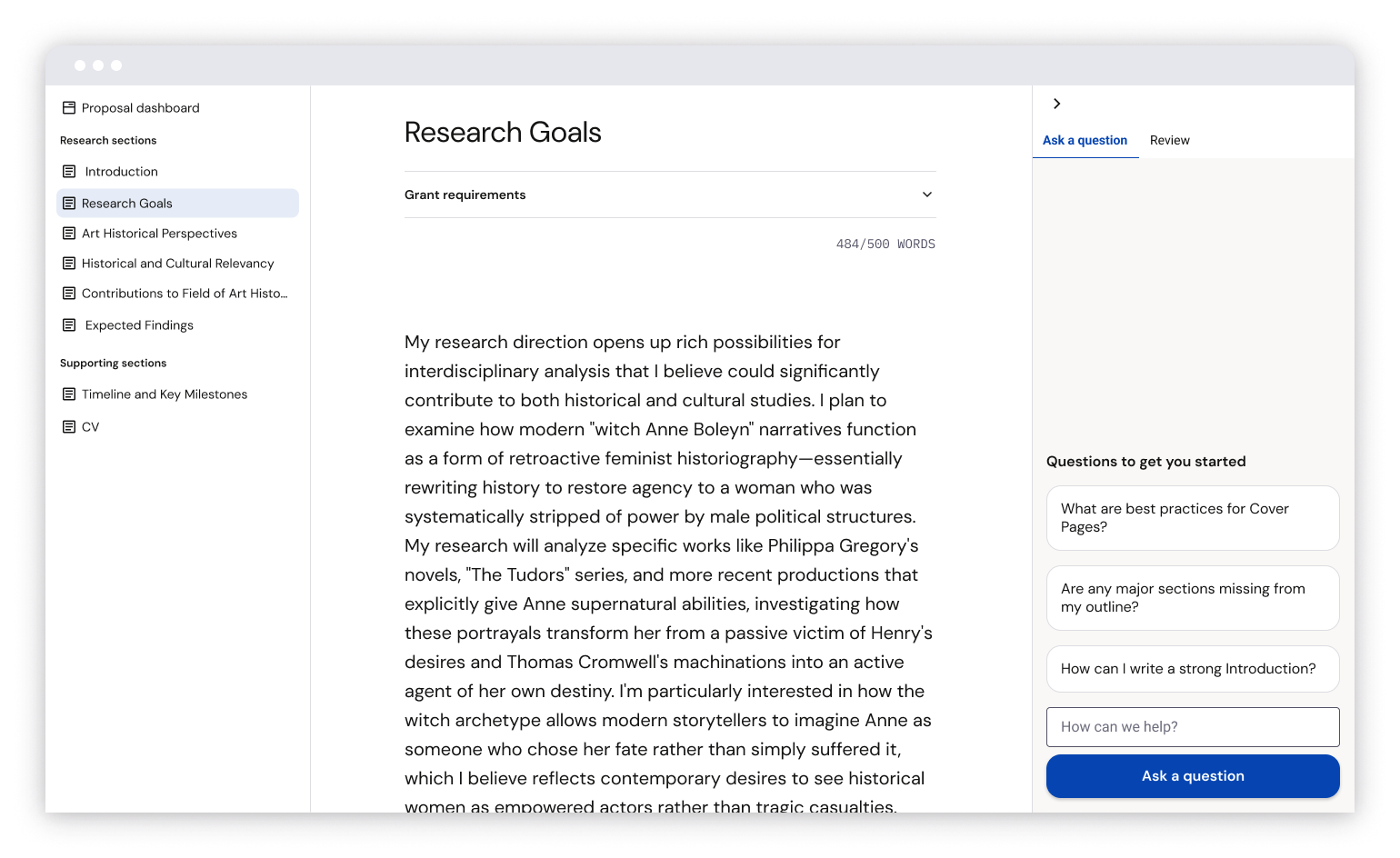

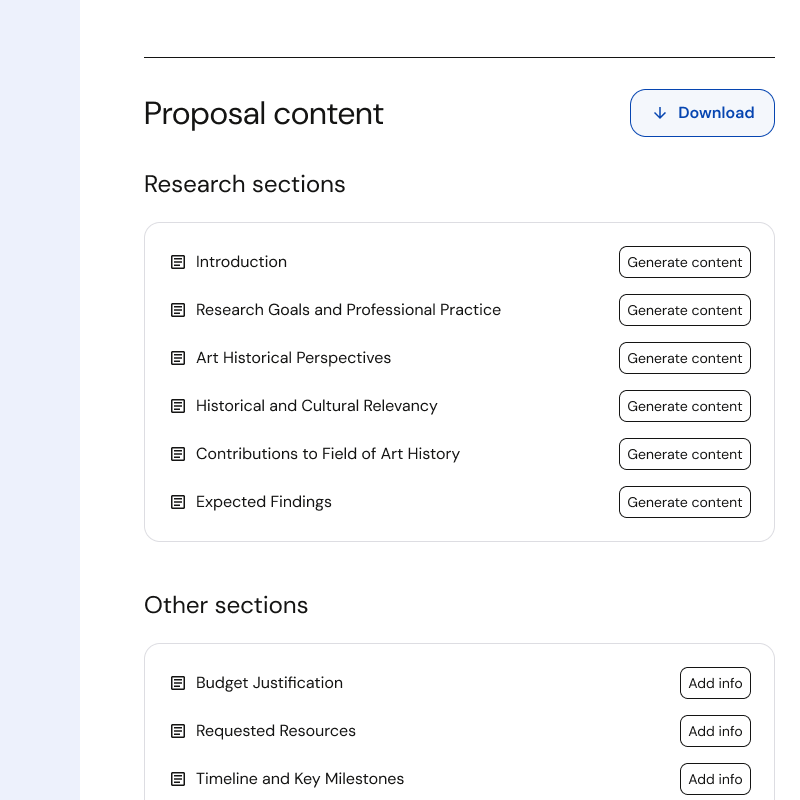

A proposal begins with an outline.

The Grants AI tool knows what major funders require and understands the requirements for each funding opportunity. Funders can specify required sections, word count limits, and formatting rules.
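One way to picture funder requirements like these is as a small data structure from which an outline is derived. The sketch below is illustrative only: `SectionRequirement`, `build_outline`, and `over_limit` are hypothetical names, and the sample requirements are made up rather than taken from any real funder or from the Grants AI implementation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SectionRequirement:
    """One required section of a funder's proposal format (hypothetical shape)."""
    title: str
    max_words: Optional[int] = None  # None means no stated word limit


def build_outline(requirements: list[SectionRequirement]) -> list[str]:
    """Turn section requirements into numbered outline headings."""
    outline = []
    for number, req in enumerate(requirements, start=1):
        limit = f" (max {req.max_words} words)" if req.max_words is not None else ""
        outline.append(f"{number}. {req.title}{limit}")
    return outline


def over_limit(req: SectionRequirement, draft: str) -> bool:
    """Report whether a draft exceeds the section's word limit, if it has one."""
    if req.max_words is None:
        return False
    return len(draft.split()) > req.max_words


# Made-up requirements for illustration; not taken from any real funder.
example_requirements = [
    SectionRequirement("Project Summary", max_words=250),
    SectionRequirement("Research Plan", max_words=3000),
    SectionRequirement("Budget Justification"),
]

for heading in build_outline(example_requirements):
    print(heading)
```

Keeping the word limit on the section itself means the same record can drive both the outline view and a length check on each drafted section.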

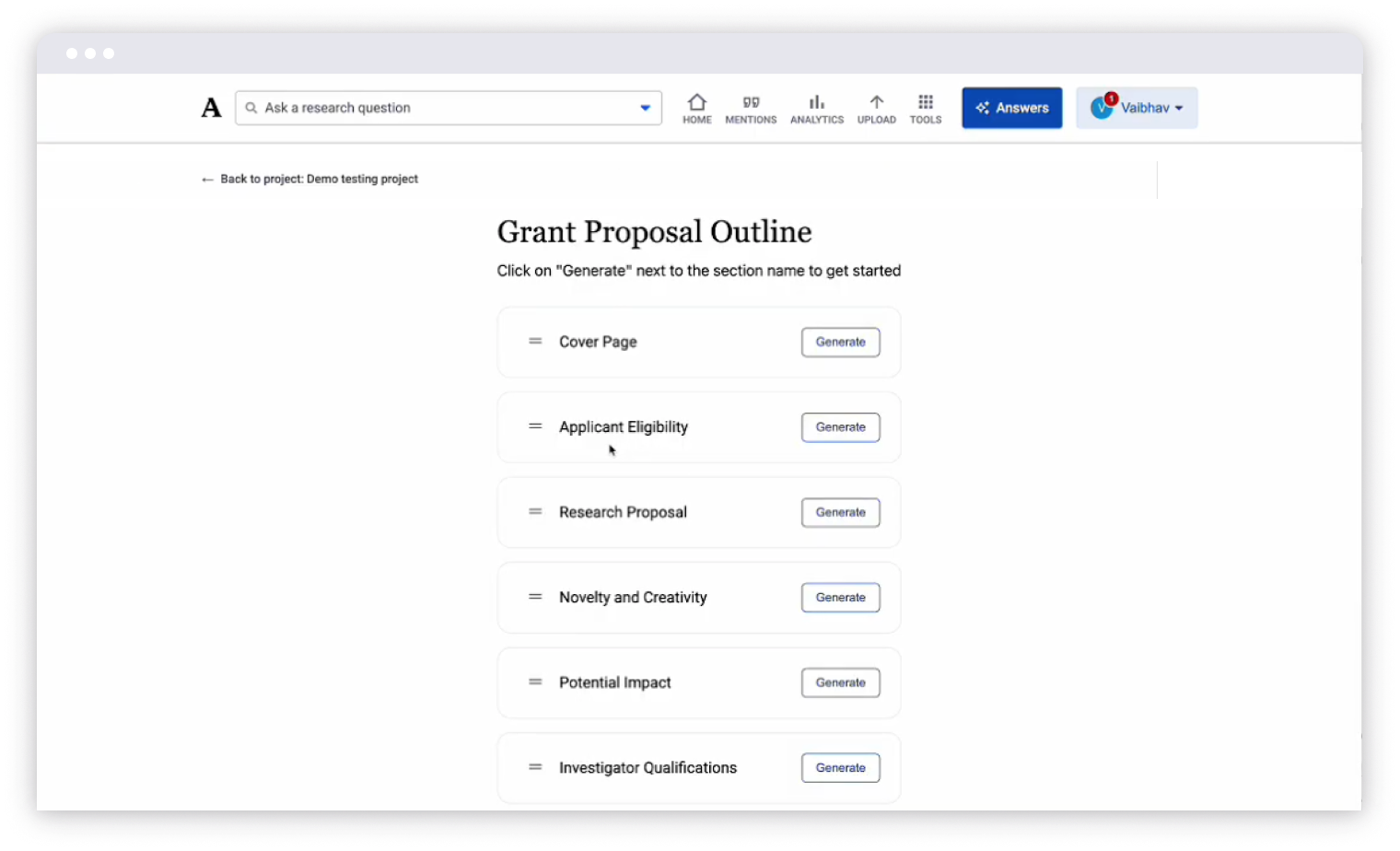

Original outline page in proof of concept

Original Writer page in proof of concept

Design updates for MVP

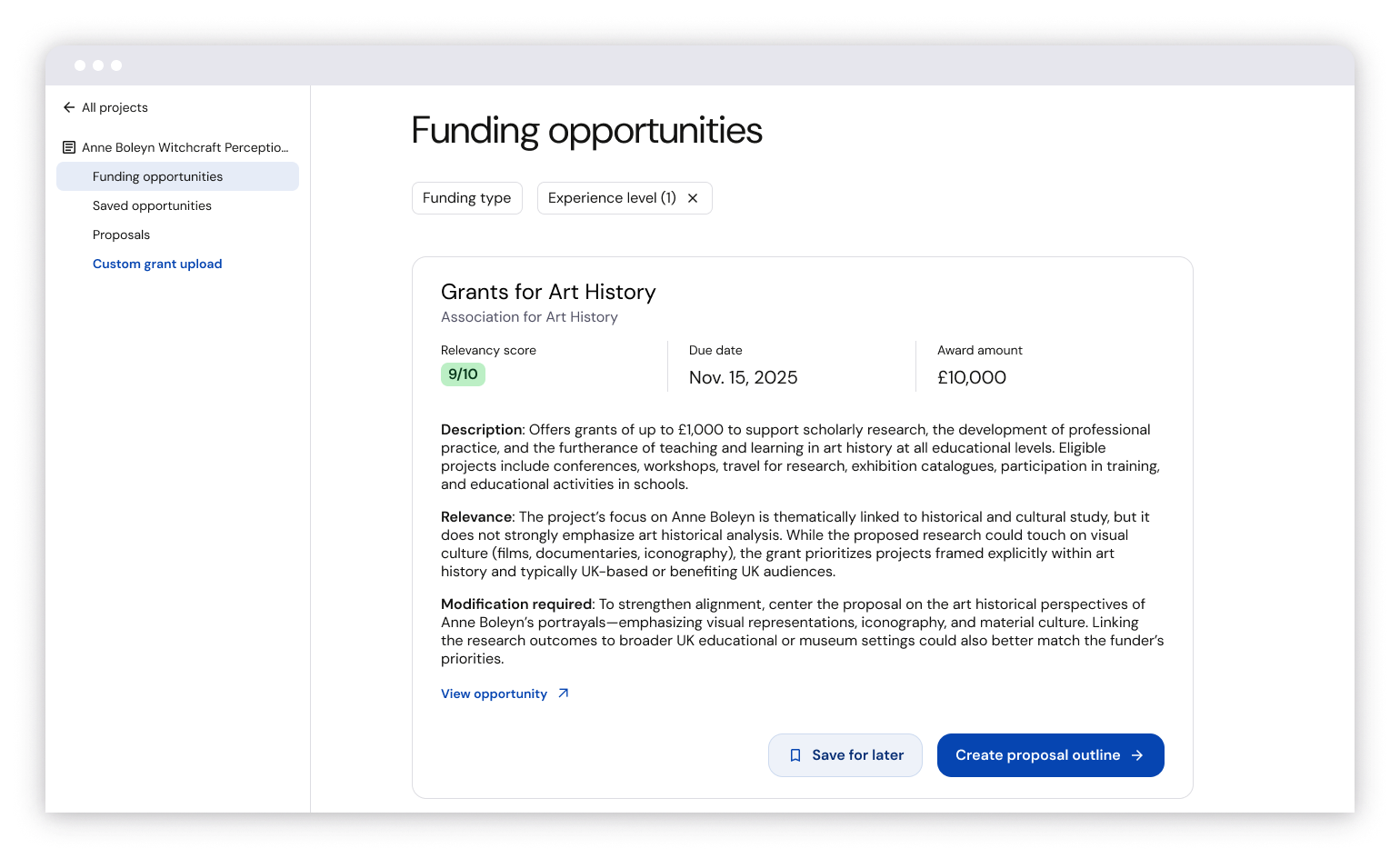

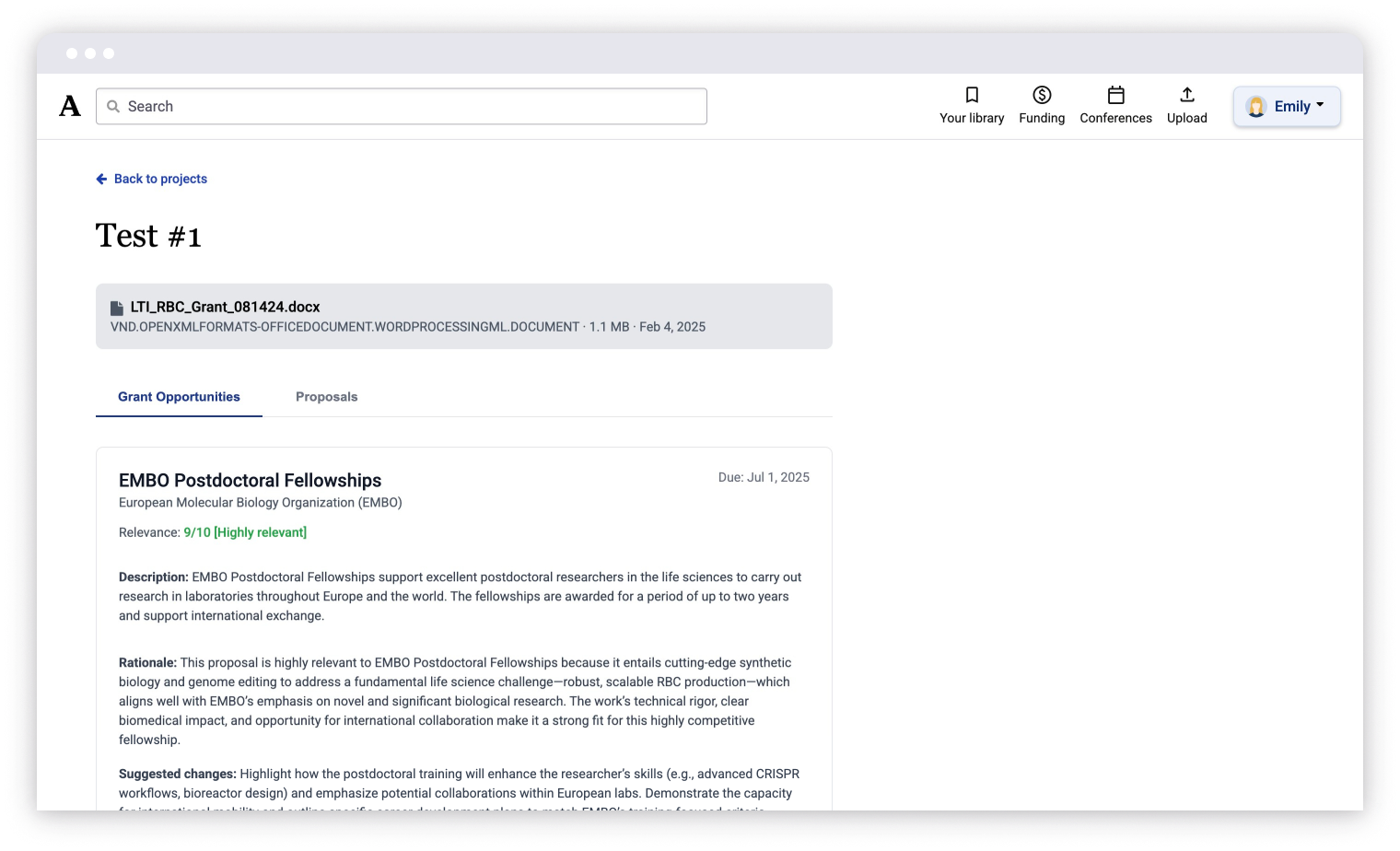

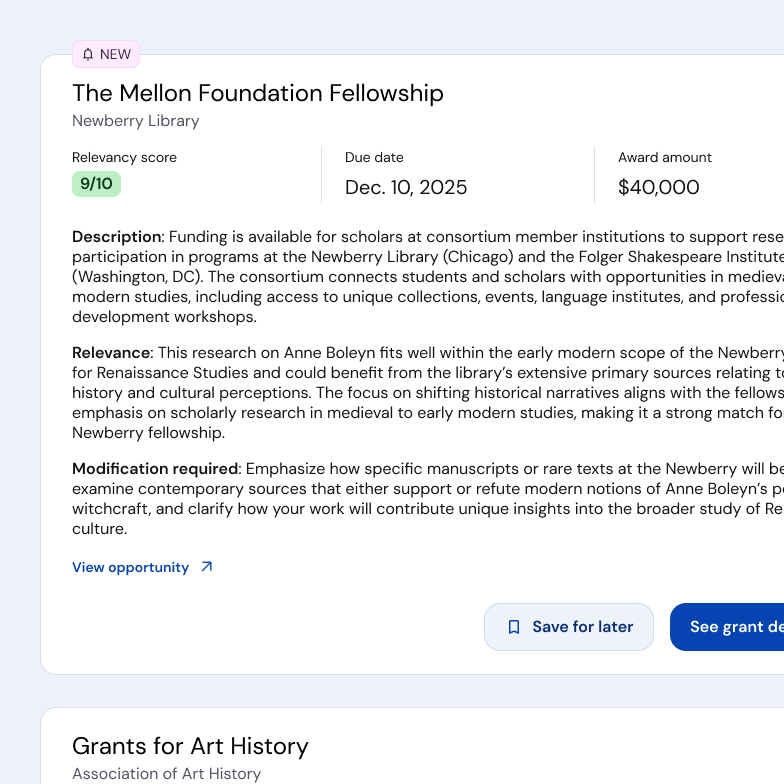

The Grant Opportunity Finder uses a person’s uploaded grant proposal to search for matching grant opportunities. Matches are scored by relevance. Each result includes a description, an explanation of why the grant is relevant to their research, and a hint of what modifications would be needed to make their proposal ready for submission.
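The shape of a single result, as described above, might be sketched as follows. The field names, the 0.0 to 1.0 score range, and the threshold parameter are all assumptions made for illustration. The source does not state how relevance is actually represented.

```python
from dataclasses import dataclass


@dataclass
class GrantMatch:
    """One search result as described above (field names are hypothetical)."""
    title: str
    relevance: float        # assumed 0.0-1.0 score; the real scale is not stated
    description: str
    why_relevant: str       # explanation tying the grant to the user's research
    suggested_changes: str  # hint of modifications needed before submission


def rank_matches(matches: list[GrantMatch], min_relevance: float = 0.0) -> list[GrantMatch]:
    """Drop matches below the threshold and return the rest, best first."""
    kept = [m for m in matches if m.relevance >= min_relevance]
    return sorted(kept, key=lambda m: m.relevance, reverse=True)


# Invented sample data for illustration only.
sample = [
    GrantMatch("Grant A", 0.62, "Funds field studies.", "Overlaps on methods.", "Shorten timeline."),
    GrantMatch("Grant B", 0.91, "Funds lab research.", "Same research topic.", "Adjust budget scope."),
    GrantMatch("Grant C", 0.30, "Funds outreach.", "Weak topical overlap.", "Rework aims."),
]

for match in rank_matches(sample, min_relevance=0.5):
    print(f"{match.relevance:.2f}  {match.title}: {match.suggested_changes}")
```

Carrying the explanation and the suggested changes alongside the score lets a results page show why a grant ranked where it did, rather than just a number.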

Grant Opportunity Finder

Original results page in proof of concept

Proposal outline

Design updates for MVP

All funding proposals require some level of customization. Sometimes the alterations can be small, like aligning the timeline and scope of work to the particulars of the award. Some funding opportunities dictate a specific methodology or outcome that requires a more substantive proposal rework. This is where the Proposal Writer’s value lies: it can help a researcher instantly understand the level of modifications expected and generate the corresponding content.

Users upload a previous research proposal, which serves as the basis for finding new funding opportunities.

We use a combination of vector and web search to find funding matches relevant to the research in the uploaded proposal.

Our system scores each match and surfaces its reasoning, along with an explanation of what would need to be modified for submission.

Based on the grant’s requirements, we generate an outline with all the sections required for submission.

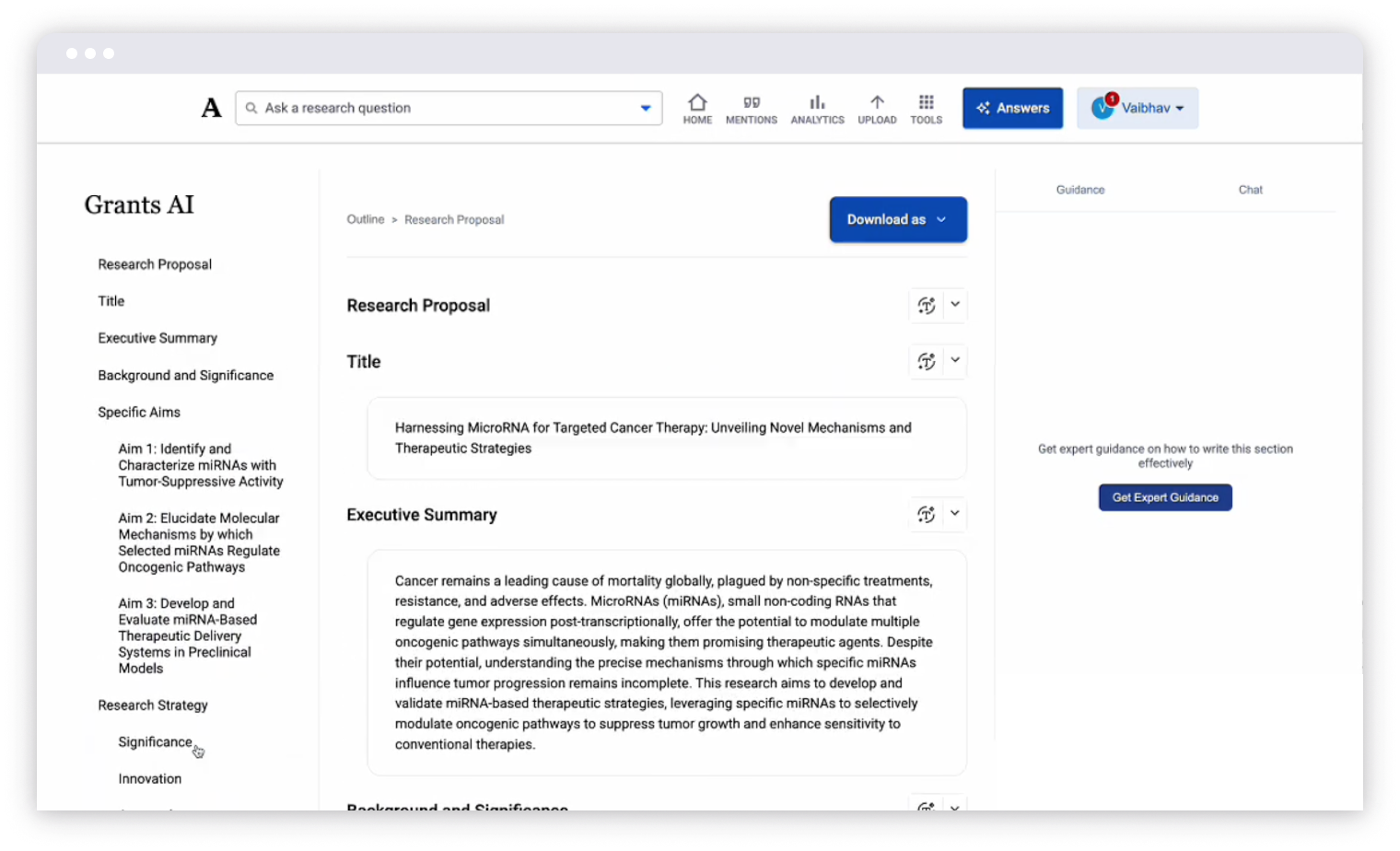

The first draft can be generated for each section using the original proposal content as the foundation.

In writing mode, users can self-edit or request changes to the generated content using the AI Grant Assistant.
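The vector-plus-web search step in the flow above can be pictured with a minimal sketch. It assumes the uploaded proposal and each known grant are represented as embedding vectors compared by cosine similarity, and that web results are merged in by simple de-duplication. The source does not say how the two result sets are actually combined, so the merge strategy, the function names, and the toy data are all assumptions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def vector_search(query: list[float], index: dict[str, list[float]], top_k: int) -> list[str]:
    """Return the top_k grant ids whose embeddings are closest to the query."""
    ranked = sorted(index, key=lambda gid: cosine_similarity(query, index[gid]), reverse=True)
    return ranked[:top_k]


def merge_candidates(vector_hits: list[str], web_hits: list[str]) -> list[str]:
    """Combine both sources, keeping first-seen order and dropping duplicates."""
    return list(dict.fromkeys(vector_hits + web_hits))


# Toy 3-dimensional embeddings; a real system would get these from an embedding model.
proposal_embedding = [0.9, 0.1, 0.0]
grant_index = {
    "grant-a": [0.8, 0.2, 0.0],
    "grant-b": [0.0, 0.1, 0.9],
    "grant-c": [0.7, 0.0, 0.3],
}
web_results = ["grant-c", "grant-d"]  # stand-in for ids returned by a web search step

candidates = merge_candidates(
    vector_search(proposal_embedding, grant_index, top_k=2),
    web_results,
)
print(candidates)
```

With this toy data, the vector search returns grant-a and grant-c, and merging in the web results adds grant-d without repeating grant-c. The merged candidates would then go on to the scoring step.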

Beta testing period

I joined the team after the initial proof of concept was developed during an internal AI hackathon. Our first project goal was to determine whether we had product-market fit with our MVP. The beta testing period lasted one quarter.

We had 326 US users during our public beta.

Our first 50 users were invited and had white-glove onboarding via live demos.

We added a marketing landing page and self-serve onboarding flow as we opened up tool access to 20% of Academia’s US users.

We then opened up the beta further to include ~75% of US users.

Finding product-market fit

We used qualitative and quantitative research to assess product-market fit with the current version.

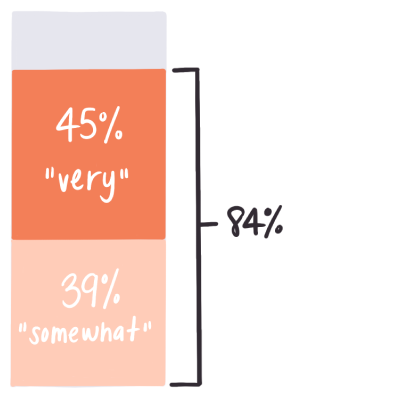

NPS survey

In a survey of our beta users, 84% said they would be very disappointed or somewhat disappointed if the tool did not exist.

Opportunity finder

Our weekly Opportunity Finder emails, which share new grant matches, see strong engagement with a 14.2% CTR.

Proposal writer content

The generated proposal content was rated 4 or 5 stars by 93% of users, suggesting strong alignment with user expectations and needs.

Proposal writer engagement

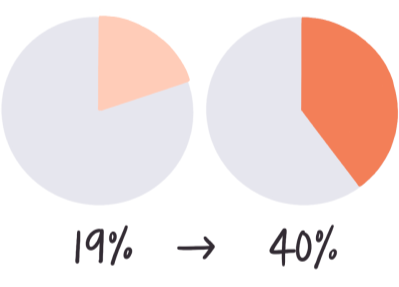

We also saw the percentage of users generating 2+ sections rise from 19% to 40%, a 21-percentage-point increase.